Manhattan Institute

Mark P. Mills

26 March 2019

EXECUTIVE SUMMARY

A movement has been growing for decades to replace hydrocarbons, which collectively supply 84% of the world’s energy. It began with the fear that we were running out of oil. That fear has since migrated to the belief that, because of climate change and other environmental concerns, society can no longer tolerate burning oil, natural gas, and coal—all of which have turned out to be abundant.

So far, wind, solar, and batteries—the favored alternatives to hydrocarbons—provide about 2% of the world’s energy and 3% of America’s. Nonetheless, a bold new claim has gained popularity: that we’re on the cusp of a tech-driven energy revolution that not only can, but inevitably will, rapidly replace all hydrocarbons.

This “new energy economy” rests on the belief—a centerpiece of the Green New Deal and other similar proposals both here and in Europe—that the technologies of wind and solar power and battery storage are undergoing the kind of disruption experienced in computing and communications, dramatically lowering costs and increasing efficiency. But this core analogy glosses over profound differences, grounded in physics, between systems that produce energy and those that produce information.

In the world of people, cars, planes, and factories, increases in consumption, speed, or carrying capacity cause hardware to expand, not shrink. The energy needed to move a ton of people, heat a ton of steel or silicon, or grow a ton of food is determined by properties of nature whose boundaries are set by laws of gravity, inertia, friction, mass, and thermodynamics—not clever software.

This paper highlights the physics of energy to illustrate why there is no possibility that the world is undergoing—or can undergo—a near-term transition to a “new energy economy.”

Among the reasons:

- Scientists have yet to discover, and entrepreneurs have yet to invent, anything as remarkable as hydrocarbons in terms of the combination of low cost, high energy density, stability, safety, and portability. In practical terms, this means that $1 million spent on utility-scale wind turbines or on solar panels will, in either case, produce about 50 million kilowatt-hours (kWh) over 30 years of operation—while an equivalent $1 million spent on a shale rig produces enough natural gas over 30 years to generate over 300 million kWh.

- Solar technologies have improved greatly and will continue to become cheaper and more efficient. But the era of 10-fold gains is over. The physics boundary for silicon photovoltaic (PV) cells, the Shockley-Queisser Limit, is a maximum conversion of about 34% of incoming sunlight's energy into electricity; the best commercial PV technology today exceeds 26%.

- Wind power technology has also improved greatly, but here, too, no 10-fold gains are left. The physics boundary for a wind turbine, the Betz Limit, is a maximum capture of 60% of kinetic energy in moving air; commercial turbines today exceed 40%.

- The annual output of Tesla’s Gigafactory, the world’s largest battery factory, could store three minutes’ worth of annual U.S. electricity demand. It would require 1,000 years of production to make enough batteries for two days’ worth of U.S. electricity demand. Meanwhile, 50–100 pounds of materials are mined, moved, and processed for every pound of battery produced.
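The Betz figure cited above can be checked with the textbook actuator-disk model, which is an assumption of this sketch rather than a derivation taken from the paper. In that model, an ideal rotor that slows the wind by an axial induction factor a captures the fraction C_p(a) = 4a(1 − a)² of the kinetic power in the air passing through it. The sketch finds the maximum of that curve with a simple grid search over the model's valid range, 0 ≤ a ≤ 0.5.

```python
def power_coefficient(a: float) -> float:
    """Fraction of the wind's kinetic power captured by an ideal rotor (actuator-disk model).

    `a` is the axial induction factor: the fractional slowdown of the wind at the rotor.
    """
    return 4.0 * a * (1.0 - a) ** 2


def betz_limit(steps: int = 100_000) -> tuple[float, float]:
    """Grid-search a in [0, 0.5] and return (best a, maximum power coefficient)."""
    best_a, best_cp = 0.0, 0.0
    for i in range(steps + 1):
        a = 0.5 * i / steps
        cp = power_coefficient(a)
        if cp > best_cp:
            best_a, best_cp = a, cp
    return best_a, best_cp


a_opt, cp_max = betz_limit()
print(f"optimal a ≈ {a_opt:.4f}, maximum capture ≈ {cp_max:.1%}")
```

The peak sits at a = 1/3, where C_p = 16/27, about 59.3%: the roughly 60% ceiling quoted above. Real rotors also lose energy to blade drag, wake rotation, and generator losses, so commercial machines operate below it.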

Introduction

A growing chorus of voices is exhorting the public, as well as government policymakers, to embrace the necessity—indeed, the inevitability—of society’s transition to a “new energy economy.” (See Peak Hydrocarbons Just Around the Corner.) Advocates claim that rapid technological changes are becoming so disruptive, and renewable energy is becoming cheap so quickly, that there is no economic risk in accelerating the move to—or even mandating—a post-hydrocarbon world that no longer needs to use much, if any, oil, natural gas, or coal.

Central to that worldview is the proposition that the energy sector is undergoing the same kind of technology disruptions that Silicon Valley tech has brought to so many other markets. Indeed, “old economy” energy companies are a poor choice for investors, according to proponents of the new energy economy, because the assets of hydrocarbon companies will soon become worthless, or “stranded.”[1] Betting on hydrocarbon companies today is like betting on Sears instead of Amazon a decade ago.

“Mission Possible,” a 2018 report by an international Energy Transitions Commission, crystallized this growing body of opinion on both sides of the Atlantic.[2] To “decarbonize” energy use, the report calls for the world to engage in three “complementary” actions: aggressively deploy renewables or so-called clean tech, improve energy efficiency, and limit energy demand.

This prescription should sound familiar, as it is identical to a nearly universal energy-policy consensus that coalesced following the 1973–74 Arab oil embargo that shocked the world. But while the past half-century’s energy policies were animated by fears of resource depletion, the fear now is that burning the world’s abundant hydrocarbons releases dangerous amounts of carbon dioxide into the atmosphere.

To be sure, history shows that grand energy transitions are possible. The key question today is whether the world is on the cusp of another.

The short answer is no. There are two core flaws with the thesis that the world can soon abandon hydrocarbons. The first: physics realities do not allow energy domains to undergo the kind of revolutionary change experienced on the digital frontiers. The second: no fundamentally new energy technology has been discovered or invented in nearly a century—certainly, nothing analogous to the invention of the transistor or the Internet.

Before these flaws are explained, it is best to understand the contours of today’s hydrocarbon-based energy economy and why replacing it would be a monumental, if not an impossible, undertaking.

Moonshot Policies and the Challenge of Scale

The universe is awash in energy. For humanity, the challenge has always been to deliver energy in a useful way that is both tolerable and available when it is needed, not when nature or luck offers it. Whether it be wind or water on the surface, sunlight from above, or hydrocarbons buried deep in the earth, converting an energy source into useful power always requires capital-intensive hardware.

Considering the world’s population and the size of modern economies, scale matters. In physics, when attempting to change any system, one has to deal with inertia and various forces of resistance; it’s far harder to turn or stop a Boeing than it is a bumblebee. In a social system, it’s far more difficult to change the direction of a country than it is a local community.

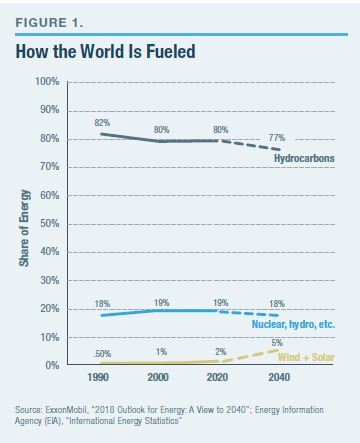

Today’s reality: hydrocarbons—oil, natural gas, and coal—supply 84% of global energy, a share that has decreased only modestly from 87% two decades ago (Figure 1).[3] Over those two decades, total world energy use rose by 50%, an amount equal to adding two entire United States’ worth of demand.[4]

The small percentage-point decline in the hydrocarbon share of world energy use required over $2 trillion in cumulative global spending on alternatives over that period.[5] Popular visuals of fields festooned with windmills and rooftops laden with solar cells don’t change the fact that these two energy sources today provide less than 2% of the global energy supply and 3% of the U.S. energy supply.

The scale challenge for any energy resource transformation begins with a description. Today, the world’s economies require an annual production of 35 billion barrels of petroleum, plus the energy equivalent of another 30 billion barrels of oil from natural gas, plus the energy equivalent of yet another 28 billion barrels of oil from coal. In visual terms: if all that fuel were in the form of oil, the barrels would form a line from Washington, D.C., to Los Angeles, and that entire line would increase in height by one Washington Monument every week.
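The three figures in this paragraph add up as follows. This sketch only totals the numbers given above and spreads the annual total over 52 weeks, which is the weekly volume behind the Washington Monument image.

```python
# Annual hydrocarbon use, in billions of barrels of oil equivalent (figures from the text above).
supply_bboe = {"petroleum": 35, "natural gas": 30, "coal": 28}

annual_total = sum(supply_bboe.values())  # billion barrels of oil equivalent per year
weekly_total = annual_total / 52          # billion barrels of oil equivalent per week

print(f"total: {annual_total} billion barrels of oil equivalent per year")
print(f"about {weekly_total:.1f} billion barrels every week")
```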

To completely replace hydrocarbons over the next 20 years, global renewable energy production would have to increase by at least 90-fold.[6] For context: it took a half-century for global oil and gas production to expand by 10-fold.[7] It is a fantasy to think, costs aside, that any new form of energy infrastructure could now expand nine times more than that in under half the time.
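Expressed as compound annual growth rates, the two expansions in this paragraph differ sharply. The sketch below uses only the 90-fold-in-20-years and 10-fold-in-50-years figures from the text, and it assumes smooth exponential growth, which is a simplification.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate needed to grow by `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


renewables = implied_annual_growth(90, 20)   # 90-fold expansion in 20 years
oil_and_gas = implied_annual_growth(10, 50)  # 10-fold expansion in 50 years

print(f"renewables: {renewables:.1%} per year, sustained for 20 years")
print(f"oil and gas, historically: {oil_and_gas:.1%} per year")
```

Roughly 25% annual growth held for two decades, against a historical pace of under 5% a year, is the gap the paragraph describes.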

If the initial goal were more modest—say, to replace hydrocarbons only in the U.S. and only those used in electricity generation—the project would require an industrial effort greater than a World War II–level of mobilization.[8] A transition to 100% non-hydrocarbon electricity by 2050 would require a U.S. grid construction program 14-fold bigger than the grid build-out rate that has taken place over the past half-century.[9] Then, to finish the transformation, this Promethean effort would need to be more than doubled to tackle nonelectric sectors, where 70% of U.S. hydrocarbons are consumed. And all that would affect a mere 16% of world energy use, America’s share.

This daunting challenge elicits a common response: “If we can put a man on the moon, surely we can [fill in the blank with any aspirational goal].” But transforming the energy economy is not like putting a few people on the moon a few times. It is like putting all of humanity on the moon—permanently.

The Physics-Driven Cost Realities of Wind and Solar

The technologies that frame the new energy economy vision distill to just three things: windmills, solar panels, and batteries.[10] While batteries don’t produce energy, they are crucial for ensuring that episodic wind and solar power is available for use in homes, businesses, and transportation.

Yet windmills and solar power are themselves not “new” sources of energy. The modern wind turbine appeared 50 years ago and was made possible by new materials, especially hydrocarbon-based fiberglass. The first commercially viable solar tech also dates back a half-century, as does the invention of the lithium battery (by an Exxon researcher).[11]

Over the decades, all three technologies have greatly improved and become roughly 10-fold cheaper.[12] Subsidies aside, that fact explains why, in recent decades, the use of wind/solar has expanded so much from a base of essentially zero.

Nonetheless, wind, solar, and battery tech will continue to become better, within limits. Those limits matter a great deal—about which, more later—because of the overwhelming demand for power in the modern world and the realities of energy sources on offer from Mother Nature.

With today’s technology, $1 million worth of utility-scale solar panels will produce about 40 million kilowatt-hours (kWh) over a 30-year operating period (Figure 2). A similar metric is true for wind: $1 million worth of a modern wind turbine produces 55 million kWh over the same 30 years.[13] Meanwhile, $1 million worth of hardware for a shale rig will produce enough natural gas over 30 years to generate over 300 million kWh.[14] That is roughly six times as much electricity for the same capital spent on primary energy-producing hardware.[15]
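The ratios behind that comparison are simple division. The sketch uses only the three lifetime-output figures given above and, like the paragraph, compares primary energy-producing hardware only, leaving out the generators and storage discussed next.

```python
# Lifetime output per $1 million of primary energy-producing hardware over 30 years,
# in millions of kWh (figures from the paragraph above).
output_per_million = {"solar": 40, "wind": 55, "shale gas": 300}

for source in ("solar", "wind"):
    ratio = output_per_million["shale gas"] / output_per_million[source]
    print(f"shale gas vs {source}: {ratio:.1f}x")

# Simple average of the two renewable figures.
renewable_avg = (output_per_million["solar"] + output_per_million["wind"]) / 2
print(f"shale gas vs wind/solar average: {output_per_million['shale gas'] / renewable_avg:.1f}x")
```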

The fundamental differences between these energy resources can also be illustrated in terms of individual equipment. For the cost to drill a single shale well, one can build two 500-foot-high, 2-megawatt (MW) wind turbines. Those two wind turbines produce a combined output averaging over the years to the energy equivalent of 0.7 barrels of oil per hour. The same money spent on a single shale well produces 10 barrels of oil per hour, or its energy equivalent in natural gas, averaged over the decades.[16]
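The 0.7-barrel figure can be reproduced from the turbines' nameplate rating if two inputs are assumed, neither of which is stated in this paragraph: a lifetime capacity factor of 30% (the upper end of the range given later in the paper) and an energy content of about 5.8 million Btu per barrel of oil, converted at 3,412 Btu per kWh. The comparison is by energy content, as in the paragraph.

```python
# Assumptions for this sketch (not from the paragraph): a 30% lifetime capacity factor,
# and 5.8 million Btu per barrel of oil at 3,412 Btu per kWh.
TURBINES = 2
NAMEPLATE_KW = 2_000              # 2 MW per turbine
CAPACITY_FACTOR = 0.30            # assumed average share of nameplate actually delivered
KWH_PER_BARREL = 5.8e6 / 3_412    # about 1,700 kWh of energy in a barrel of oil

average_kw = TURBINES * NAMEPLATE_KW * CAPACITY_FACTOR  # average kWh delivered per hour
barrels_per_hour = average_kw / KWH_PER_BARREL

print(f"average output: {average_kw:,.0f} kW, or {barrels_per_hour:.2f} barrels of oil per hour")
print(f"a well at 10 barrels/hour delivers about {10 / barrels_per_hour:.0f}x as much energy")
```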

The huge disparity in output arises from the inherent differences in energy densities that are features of nature immune to public aspiration or government subsidy. The high energy density of the physical chemistry of hydrocarbons is unique and well understood, as is the science underlying the low energy density inherent in surface sunlight, wind volumes, and velocity.[17] Regardless of what governments dictate that utilities pay for that output, the quantity of energy produced is determined by how much sunlight or wind is available over any period of time and the physics of the conversion efficiencies of photovoltaic cells or wind turbines.

These kinds of comparisons between wind, solar, and natural gas illustrate the starting point in making a raw energy resource useful. But for any form of energy to become a primary source of power, additional technology is required. For gas, one necessarily spends money on a turbo-generator to convert the fuel into grid electricity. For wind/solar, spending is required for some form of storage to convert episodic electricity into utility-grade, 24/7 power.

The high cost of ensuring energy availability

Availability is the single most critical feature of any energy infrastructure, followed by price, followed by the eternal search for decreasing costs without affecting availability. Until the modern energy era, economic and social progress had been hobbled by the episodic nature of energy availability. That’s why, so far, more than 90% of America’s electricity, and 99% of the power used in transportation, comes from sources that can easily supply energy any time on demand.[18]

In our data-centric, increasingly electrified society, always-available power is vital. But, as with all things, physics constrains the technologies and the costs for supplying availability.[19] For hydrocarbon-based systems, availability is dominated by the cost of equipment that can convert fuel-to-power continuously for at least 8,000 hours a year, for decades. Meanwhile, it’s inherently easy to store the associated fuel to meet expected or unexpected surges in demand, or delivery failures in the supply chain caused by weather or accidents.

It costs less than $1 a barrel to store oil or natural gas (in oil-energy equivalent terms) for a couple of months.[20] Storing coal is even cheaper. Thus, unsurprisingly, the U.S., on average, has about one to two months’ worth of national demand in storage for each kind of hydrocarbon at any given time.[21]

Meanwhile, with batteries, it costs roughly $200 to store the energy equivalent to one barrel of oil.[22] Thus, instead of months, barely two hours of national electricity demand can be stored in the combined total of all the utility-scale batteries on the grid plus all the batteries in the 1 million electric cars that exist today in America.[23]

For wind/solar, the features that dominate cost of availability are inverted, compared with hydrocarbons. While solar arrays and wind turbines do wear out and require maintenance as well, the physics and thus additional costs of that wear-and-tear are less challenging than with combustion turbines. But the complex and comparatively unstable electrochemistry of batteries makes for an inherently more expensive and less efficient way to store energy and ensure its availability.

Since hydrocarbons are so easily stored, idle conventional power plants can be dispatched—ramped up and down—to follow cyclical demand for electricity. Wind turbines and solar arrays cannot be dispatched when there’s no wind or sun. As a matter of geophysics, both wind-powered and sunlight-energized machines produce energy, averaged over a year, about 25%–30% of the time, often less.[24] Conventional power plants, however, have very high “availability,” in the 80%–95% range, and often higher.[25]

A wind/solar grid would need to be sized both to meet peak demand and to have enough extra capacity beyond peak needs in order to produce and store additional electricity when sun and wind are available. This means, on average, that a pure wind/solar system would necessarily have to be about threefold the capacity of a hydrocarbon grid: i.e., one needs to build 3 kW of wind/solar equipment for every 1 kW of combustion equipment eliminated. That directly translates into a threefold cost disadvantage, even if the per-kW costs were all the same.[26]
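Using the ranges from the previous paragraph, the threefold figure falls out of a ratio of average outputs. This sketch is deliberately simplified: it matches average energy only and ignores storage losses and seasonal lows, both of which would push the requirement higher.

```python
def overbuild_factor(conventional_availability: float, variable_capacity_factor: float) -> float:
    """kW of wind/solar needed to deliver the same average energy as 1 kW of conventional plant."""
    return conventional_availability / variable_capacity_factor


# Ranges from the text: 80%-95% availability for conventional plants,
# 25%-30% average capacity factor for wind and solar.
for availability in (0.80, 0.95):
    for cf in (0.25, 0.30):
        print(f"availability {availability:.0%}, capacity factor {cf:.0%}: "
              f"{overbuild_factor(availability, cf):.1f} kW per kW replaced")
```

The four combinations span roughly 2.7 to 3.8 kW of wind/solar per kW of conventional capacity, centered near the threefold figure in the text.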

Even this necessary extra capacity would not suffice. Meteorological and operating data show that average monthly wind and solar electricity output can fall by as much as half during each source’s respective “low” season.[27]

The myth of grid parity

How do these capacity and cost disadvantages square with claims that wind and solar are already at or near “grid parity” with conventional sources of electricity? The U.S. Energy Information Administration (EIA) and other similar analyses report a “levelized cost of energy” (LCOE) for all types of electric power technologies. In the EIA’s LCOE calculations, electricity from a wind turbine or a solar array is calculated to be 36% and 46% more expensive, respectively, than electricity from a natural-gas turbine—i.e., approaching parity.[28] But in a critical and rarely noted caveat, EIA states: “The LCOE values for dispatchable and non-dispatchable technologies are listed separately in the tables because comparing them must be done carefully”[29] (emphasis added). Put differently, the LCOE calculations do not take into account the array of real, if hidden, costs needed to operate a reliable 24/7 and 365-day-per-year energy infrastructure—or, in particular, a grid that used only wind/solar.

The LCOE considers the hardware in isolation while ignoring real-world system costs essential to supply 24/7 power. Equally misleading, an LCOE calculation, despite its illusion of precision, relies on a variety of assumptions and guesses subject to dispute, if not bias.

For example, an LCOE assumes that the future cost of competing fuels—notably, natural gas—will rise significantly. But that means that the LCOE is more of a forecast than a calculation. This is important because a “levelized cost” uses such a forecast to calculate a purported average cost over a long period. The assumption that gas prices will go up is at variance with the fact that they have decreased over the past decade and the evidence that low prices are the new normal for the foreseeable future.[30] If the LCOE calculation is adjusted to reflect a future in which gas prices do not rise, the LCOE cost advantage of natural gas over wind/solar increases radically.
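A minimal sketch of the generic levelized-cost formula (discounted lifetime costs divided by discounted lifetime energy) shows how the fuel-price forecast feeds into the result. This is the textbook form, not EIA's published methodology, and every plant input below is hypothetical, chosen only for illustration.

```python
def lcoe(capex: float, annual_om: float, annual_kwh: float, fuel_cost_year1: float,
         fuel_escalation: float, discount_rate: float, years: int) -> float:
    """Levelized cost in $/kWh: discounted lifetime costs / discounted lifetime energy.

    `fuel_cost_year1` is total fuel spending in the first operating year, in dollars;
    it grows by `fuel_escalation` each year after that.
    """
    costs = capex  # capital is paid up front, in year 0
    energy = 0.0
    for t in range(1, years + 1):
        discount = (1 + discount_rate) ** t
        fuel = fuel_cost_year1 * (1 + fuel_escalation) ** (t - 1)
        costs += (annual_om + fuel) / discount
        energy += annual_kwh / discount
    return costs / energy


# Hypothetical 1-MW gas plant: $1M capital, 50% capacity factor, $0.025/kWh fuel in year 1.
annual_kwh = 1_000 * 8_760 * 0.5
plant = dict(capex=1_000_000, annual_om=20_000, annual_kwh=annual_kwh,
             fuel_cost_year1=0.025 * annual_kwh, discount_rate=0.07, years=30)
flat = lcoe(**plant, fuel_escalation=0.0)
rising = lcoe(**plant, fuel_escalation=0.03)
print(f"flat gas prices:  ${flat:.4f}/kWh")
print(f"gas rising 3%/yr: ${rising:.4f}/kWh")
```

The only difference between the two runs is the assumed fuel escalation, so the gap between the results is produced entirely by that forecast.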

An LCOE incorporates an even more subjective feature, called the “discount rate,” which is a way of comparing the value of money today versus the future. A low discount rate has the effect of tilting an outcome to make it more appealing to spend precious capital today to solve a future (theoretical) problem. Advocates of using low discount rates are essentially assuming slow economic growth.[31]

A high discount rate effectively assumes that a future society will be far richer than today (not to mention have better technology).[32] Economist William Nordhaus’s work in this field, in which he advocates using a high discount rate, earned him a share of the 2018 Nobel Memorial Prize in Economic Sciences.
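The effect described in these two paragraphs comes from compounding. The sketch below values a single future cost at three discount rates; the $1 million amount, the 50-year horizon, and the rates themselves are hypothetical. The lower the rate, the more that future cost weighs in today's decision, which is why a low rate favors spending capital now.

```python
def present_value(amount: float, years: int, rate: float) -> float:
    """Value today of `amount` received (or spent) `years` from now, discounted at `rate`."""
    return amount / (1 + rate) ** years


FUTURE_COST = 1_000_000  # hypothetical $1 million cost incurred 50 years from now
for rate in (0.01, 0.03, 0.05):
    print(f"discount rate {rate:.0%}: worth ${present_value(FUTURE_COST, 50, rate):,.0f} today")
```

At 1%, the future cost is still worth roughly $600,000 today; at 5%, less than $90,000.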

An LCOE also requires an assumption about average multi-decade capacity factors, the share of time the equipment actually operates (i.e., the real, not theoretical, amount of time the sun shines and wind blows). EIA assumes, for example, 41% and 29% capacity factors, respectively, for wind and solar. But data collected from operating wind and solar farms reveal actual median capacity factors of 33% and 22%.[33] The gap between an assumed 40% capacity factor and an actual 30% means that, over the 20-year life of a 2-MW wind turbine, $3 million of energy production assumed in the financial models won’t exist—and that’s for a turbine with an initial capital cost of about $3 million.
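The paragraph does not state the electricity price behind the $3 million figure. Working backward from its other numbers, the sketch computes the output lost to a 10-point capacity-factor shortfall and the price per kWh at which that output would be worth $3 million; the implied price is derived here, not sourced.

```python
HOURS_PER_YEAR = 8_760
NAMEPLATE_KW = 2_000              # 2-MW turbine
LIFE_YEARS = 20
ASSUMED_CF, ACTUAL_CF = 0.40, 0.30

# kWh assumed in the financial model that the turbine never produces.
missing_kwh = NAMEPLATE_KW * (ASSUMED_CF - ACTUAL_CF) * HOURS_PER_YEAR * LIFE_YEARS

# Price per kWh at which that missing output would be worth $3 million.
implied_price = 3_000_000 / missing_kwh

print(f"missing output: {missing_kwh / 1e6:.1f} million kWh")
print(f"$3 million shortfall implies about ${implied_price:.3f}/kWh")
```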

U.S. wind-farm capacity factors have been getting better but at a slow rate of about 0.7% per year over the past two decades.[34] Notably, this gain was achieved mainly by reducing the number of turbines per acre trying to scavenge moving air—resulting in average land used per unit of wind energy increasing by some 50%.

LCOE calculations do reasonably include costs for such things as taxes, the cost of borrowing, and maintenance. But here, too, mathematical outcomes give the appearance of precision while hiding assumptions. For example, assumptions about maintenance costs and performance of wind turbines over the long term may be overly optimistic. Data from the U.K., which is further down the wind-favored path than the U.S., point to far faster degradation (less electricity per turbine) than originally forecast.[35]

To address at least one issue with using LCOE as a tool, the International Energy Agency (IEA) recently proposed the idea of a “value-adjusted” LCOE, or VALCOE, to include the elements of flexibility and incorporate the economic implications of dispatchability. IEA calculations using a VALCOE method found coal power, for example, to be far cheaper than solar, with the cost penalty widening as a grid’s share of solar generation rises.[36]

One would expect that, long before a grid is 100% wind/solar, the kinds of real costs outlined above should already be visible. As it happens, regardless of putative LCOEs, we do have evidence of the economic impact that arises from increasing the use of wind and solar energy.

The Hidden Costs of a “Green” Grid

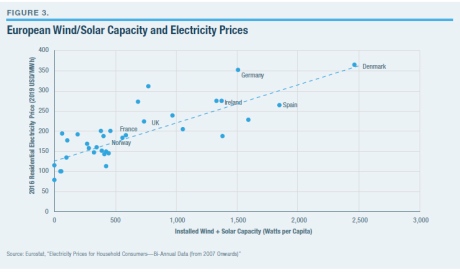

Subsidies, tax preferences, and mandates can hide real-world costs, but when enough of them accumulate, the effect should be visible in overall system costs. And it is. In Europe, the data show that the higher the share of wind/solar, the higher the average cost of grid electricity (Figure 3).

Germany and Britain, well down the “new energy” path, have seen average electricity rates rise 60%–110% over the past two decades.[37] The same pattern—more wind/solar and higher electricity bills—is visible in Australia and Canada.[38]

Since the per-capita share of wind power in the U.S. is still only a small fraction of that in most of Europe, the cost impacts on American ratepayers are less dramatic and less visible. Nonetheless, average U.S. residential electric costs have risen some 20% over the past 15 years.[39] That should not have been the case. Average electric rates should have gone down, not up.

Here’s why: coal and natural gas together supplied about 70% of electricity over that 15-year period.[40] The price of fuel accounts for about 60%–70% of the cost to produce electricity when using hydrocarbons.[41] Thus, about half the average cost of America’s electricity depends on coal and gas prices. The price of both those fuels has gone down by over 50% over that 15-year period. Utilities’ costs to purchase gas and coal, specifically, are down some 25% over the past decade alone. In other words, cost savings from the shale-gas revolution have significantly insulated consumers, so far, from even higher rate increases.
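The "about half" figure is the product of two shares given in this paragraph. The sketch below multiplies them and, under the simplifying assumption that every other cost component stayed flat (an assumption of this sketch, not a claim in the paper), shows the size of the change that fuel prices alone would imply.

```python
def fuel_driven_change(fossil_share: float, fuel_share: float, fuel_price_change: float) -> float:
    """Fractional change in average electricity cost caused by fuel prices alone, all else equal."""
    return fossil_share * fuel_share * fuel_price_change


FOSSIL_SHARE = 0.70          # coal + gas share of U.S. generation over the period
FUEL_PRICE_CHANGE = -0.50    # coal and gas prices fell by over 50%

for fuel_share in (0.60, 0.70):  # fuel's share of the cost of fossil-fired electricity
    exposure = FOSSIL_SHARE * fuel_share
    change = fuel_driven_change(FOSSIL_SHARE, fuel_share, FUEL_PRICE_CHANGE)
    print(f"fuel share {fuel_share:.0%}: {exposure:.0%} of average cost tracks fuel prices; "
          f"implied change {change:+.0%}")
```

Either way, a fall in average electricity cost of roughly a fifth or more would be expected from fuel prices alone, which is the contrast with the observed 20% rise that the paragraph draws.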

The increased use of wind/solar imposes a variety of hidden, physics-based costs that are rarely acknowledged in utility or government accounting. For example, when large quantities of power are rapidly, repeatedly, and unpredictably cycled up and down, the challenge and costs associated with “balancing” a grid (i.e., keeping it from failing) are greatly increased. OECD analysts estimate that at least some of those “invisible” costs imposed on the grid add 20%–50% to the cost of grid kilowatt-hours.[42]

Furthermore, flipping the role of the grid’s existing power plants from primary to backup for wind/solar leads to other real but unallocated costs that emerge from physical realities. Increased cycling of conventional power plants increases wear-and-tear and maintenance costs. It also reduces the utilization of those expensive assets, which means that capital costs are spread out over fewer kWh produced—thereby arithmetically increasing the cost of each of those kilowatt-hours.[43]
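The arithmetic of lower utilization is straightforward: the same annual capital charge is divided by fewer kilowatt-hours. In the sketch below, the plant size, the capital charge, and the two utilization levels are all hypothetical.

```python
HOURS_PER_YEAR = 8_760


def capital_cost_per_kwh(annual_capital_charge: float, capacity_kw: float, utilization: float) -> float:
    """Annualized capital cost spread over the kWh a plant actually produces."""
    return annual_capital_charge / (capacity_kw * utilization * HOURS_PER_YEAR)


# Hypothetical plant: $100,000 per year of capital charges on 1,000 kW of capacity.
before = capital_cost_per_kwh(100_000, 1_000, 0.80)  # run as a primary plant
after = capital_cost_per_kwh(100_000, 1_000, 0.50)   # demoted to backup duty
print(f"80% utilization: ${before:.4f}/kWh; 50% utilization: ${after:.4f}/kWh "
      f"({after / before - 1:.0%} higher)")
```

The increase depends only on the ratio of the two utilization levels (0.80 / 0.50 = 1.6), not on the hypothetical dollar figures.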

Then, if the share of episodic power becomes significant, the potential rises for complete system blackouts. That has happened twice after the wind died down unexpectedly (with some customers out for days in some areas) in the state of South Australia, which derives over 40% of its electricity from wind.[44]

After a total system outage in South Australia in 2016, Tesla, with much media fanfare, installed the world’s single largest lithium battery “farm” on that grid.[45] For context, to keep South Australia lit for one half-day of no wind would require 80 such “world’s biggest” Tesla battery farms, and that’s on a grid that serves just 2.5 million people.

Engineers have other ways to achieve reliability, such as old-fashioned giant diesel-engine generators used as backup (engines essentially the same as those that propel cruise ships or that are used to back up data centers). Without fanfare, because of rising use of wind, U.S. utilities have been installing grid-scale engines at a furious pace. The grid now has over $4 billion in utility-scale, engine-driven generators (enough for about 100 cruise ships), with lots more to come. Most burn natural gas, though a lot of them are oil-fired. Three times as many such big reciprocating engines have been added to America’s grid over the past two decades as over the half-century prior to that.[46]

All these costs are real and are not allocated to wind or solar generators. But electricity consumers pay them. A way to understand what’s going on: managing grids with hidden costs imposed on non-favored players would be like levying fees on car drivers for the highway wear-and-tear caused by heavy trucks while simultaneously subsidizing the cost of fueling those trucks.

The issue with wind and solar power comes down to a simple point: they are impractical on a national scale as a major or primary fuel source for generating electricity. As with any technology, pushing the boundaries of practical utilization is possible but usually not sensible or cost-effective. Helicopters offer an instructive analogy.

The development of a practical helicopter in the 1950s (four decades after its invention) inspired widespread hyperbole about that technology revolutionizing personal transportation. Today, the manufacture and use of helicopters is a multibillion-dollar niche industry providing useful and often-vital services. But one would no more use helicopters for regular Atlantic travel—though doable with elaborate logistics—than employ a nuclear reactor to power a train or photovoltaic systems to power a country.

Batteries Cannot Save the Grid or the Planet

Batteries are a central feature of new energy economy aspirations. It would indeed revolutionize the world to find a technology that could store electricity as effectively and cheaply as, say, oil in a barrel, or natural gas in an underground cavern.[47] Such electricity-storage hardware would render it unnecessary even to build domestic power plants. One could imagine an OKEC (Organization of Kilowatt-Hour Exporting Countries) that shipped barrels of electrons around the world from nations where the cost to fill those “barrels” was lowest: solar arrays in the Sahara, coal mines in Mongolia (out of reach of Western regulators), or the great rivers of Brazil.

But in the universe that we live in, the cost to store energy in grid-scale batteries is, as earlier noted, about 200-fold more than the cost to store natural gas to generate electricity when it’s needed.[48] That’s why we store, at any given time, months’ worth of national energy supply in the form of natural gas or oil.

Battery storage is quite another matter. Consider Tesla, the world’s best-known battery maker: $200,000 worth of Tesla batteries, which collectively weigh over 20,000 pounds, are needed to store the energy equivalent of one barrel of oil.[49] A barrel of oil, meanwhile, weighs 300 pounds and can be stored in a $20 tank. Those are the realities of today’s lithium batteries. Even a 200% improvement in underlying battery economics and technology won’t close such a gap.
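The gap can be expressed as two ratios using only the figures in this paragraph. As in the text, the oil-side cost is the $20 storage tank, not the oil itself. This sketch reads "a 200% improvement" as a threefold gain, which is an interpretation.

```python
# Figures from the paragraph above, all per barrel of oil-equivalent energy.
battery = {"cost_usd": 200_000, "weight_lb": 20_000}
oil = {"cost_usd": 20, "weight_lb": 300}  # $20 storage tank; 300 lb is the oil's own weight

weight_ratio = battery["weight_lb"] / oil["weight_lb"]
cost_ratio = battery["cost_usd"] / oil["cost_usd"]
print(f"batteries weigh ~{weight_ratio:.0f}x and cost ~{cost_ratio:,.0f}x as much")

# Interpreting "a 200% improvement" as three times better: divide each ratio by 3.
print(f"after a 200% improvement: ~{weight_ratio / 3:.0f}x weight, ~{cost_ratio / 3:,.0f}x cost")
```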

Nonetheless, policymakers in America and Europe enthusiastically embrace programs and subsidies to vastly expand the production and use of batteries at grid scale.[50] Astonishing quantities of batteries will be needed to keep country-level grids energized—and the level of mining required for the underlying raw materials would be epic. For the U.S., at least, given where the materials are mined and where batteries are made, imports would increase radically. Perspective on each of these realities follows.

How many batteries would it take to light the nation?

A grid based entirely on wind and solar requires more than preparation for the normal daily variability of wind and sun; it also requires preparation for the frequency and duration of periods with far less wind and sunlight combined, and of periods with none of either. While uncommon, such a combined event—daytime continental cloud cover with no significant wind anywhere, or nighttime with no wind—has occurred more than a dozen times over the past century—effectively, at least once every decade. On these occasions, a combined wind/solar grid would be able to produce only a tiny fraction of the nation’s electricity needs. There have also been frequent one-hour periods when 90% of the national electric supply would have disappeared.[51]

So how many batteries would be needed to store, say, not two months’ but two days’ worth of the nation’s electricity? The $5 billion Tesla “Gigafactory” in Nevada is currently the world’s biggest battery manufacturing facility.[52] Its total annual production could store three minutes’ worth of annual U.S. electricity demand. Thus, fabricating enough batteries to store two days’ worth of U.S. electricity demand would require 1,000 years of Gigafactory production.
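The 1,000-year figure follows from the three-minute figure. The first part of the sketch uses only the text's numbers. The second part adds an assumption not in the text, roughly 4,000 TWh of U.S. electricity demand per year, to show what three minutes of demand implies for the factory's annual battery output.

```python
MINUTES_PER_DAY = 24 * 60
MINUTES_PER_YEAR = 365 * MINUTES_PER_DAY
MINUTES_STORED_PER_PRODUCTION_YEAR = 3  # from the text: one year of output stores ~3 minutes of demand

# Years of production needed to store two days of U.S. demand.
years_needed = 2 * MINUTES_PER_DAY / MINUTES_STORED_PER_PRODUCTION_YEAR
print(f"{years_needed:.0f} years of Gigafactory output for two days of storage")

# Assumption (not from the text): U.S. demand of roughly 4,000 TWh (4.0e12 kWh) per year.
ASSUMED_US_DEMAND_KWH = 4.0e12
kwh_per_minute = ASSUMED_US_DEMAND_KWH / MINUTES_PER_YEAR
implied_annual_output_gwh = kwh_per_minute * MINUTES_STORED_PER_PRODUCTION_YEAR / 1e6
print(f"implied battery output: about {implied_annual_output_gwh:.0f} GWh per year")
```

The exact result, 960 years, is the source of the rounded 1,000 years in the text.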

Wind/solar advocates propose to minimize battery usage with enormously long transmission lines on the observation that it is always windy or sunny somewhere. While theoretically feasible (though not always true, even at country-level geographies), the length of transmission needed to reach somewhere “always” sunny/windy also entails substantial reliability and security challenges. (And long-distance transport of energy by wire is twice as expensive as by pipeline.)[53]

Building massive quantities of batteries would have epic implications for mining

A key rationale for the pursuit of a new energy economy is to reduce environmental externalities from the use of hydrocarbons. While the focus these days is mainly on the putative long-term effects of carbon dioxide, all forms of energy production entail various unregulated externalities inherent in extracting, moving, and processing minerals and materials.

Radically increasing battery production will dramatically affect mining, as well as the energy used to access, process, and move minerals and the energy needed for the battery fabrication process itself. About 60 pounds of batteries are needed to store the energy equivalent to that in one pound of hydrocarbons. Meanwhile, 50–100 pounds of various materials are mined, moved, and processed for one pound of battery produced.[54] Such underlying realities translate into enormous quantities of minerals—such as lithium, copper, nickel, graphite, rare earths, and cobalt—that would need to be extracted from the earth to fabricate batteries for grids and cars.[55] A battery-centric future means a world mining gigatons more materials.[56] And this says nothing about the gigatons of materials needed to fabricate wind turbines and solar arrays, too.[57]

Even without a new energy economy, the mining required to make batteries will soon dominate the production of many minerals. Lithium battery production today already accounts for about 40% and 25%, respectively, of all lithium and cobalt mining.[58] In an all-battery future, global mining would have to expand by more than 200% for copper, by at least 500% for minerals like lithium, graphite, and rare earths, and far more than that for cobalt.[59]

Then there are the hydrocarbons and electricity needed to undertake all the mining activities and to fabricate the batteries themselves. In rough terms, it requires the energy equivalent of about 100 barrels of oil to fabricate a quantity of batteries that can store a single barrel of oil-equivalent energy.[60]

Given the regulatory hostility to mining in the United States, a battery-centric energy future virtually guarantees more mining elsewhere and rising import dependencies for America. Most of the relevant mines in the world are in Chile, Argentina, Australia, Russia, the Congo, and China. Notably, the Democratic Republic of Congo produces 70% of global cobalt, and China refines 40% of that output for the world.[61]

China already dominates global battery manufacturing and is on track to supply nearly two-thirds of all production by 2020.[62] The relevance for the new energy economy vision: 70% of China’s grid is fueled by coal today and will still be at 50% in 2040.[63] This means that, over the life span of the batteries, there would be more carbon-dioxide emissions associated with manufacturing them than would be offset by using those batteries to, say, replace internal combustion engines.[64]

Transforming personal transportation from hydrocarbon-burning to battery-propelled vehicles is another central pillar of the new energy economy. Electric vehicles (EVs) are expected not only to replace petroleum on the roads but to serve as backup storage for the electric grid as well.[65]

Lithium batteries have finally enabled EVs to become reasonably practical. Tesla, which now sells more cars in the top price category in America than does Mercedes-Benz, has inspired a rush of the world’s manufacturers to produce appealing battery-powered vehicles.[66] This has emboldened bureaucratic aspirations for outright bans on the sale of internal combustion engines, notably in Germany, France, Britain, and, unsurprisingly, California.

Such a ban is not easy to imagine. Optimists forecast that the number of EVs in the world will rise from today’s nearly 4 million to 400 million in two decades.[67] A world with 400 million EVs by 2040 would decrease global oil demand by barely 6%. This sounds counterintuitive, but the numbers are straightforward. There are about 1 billion automobiles today, and they use about 30% of the world’s oil.[68] (Heavy trucks, aviation, petrochemicals, heat, etc. use the rest.) By 2040, there would be an estimated 2 billion cars in the world. Four hundred million EVs would amount to 20% of all the cars on the road; if each displaced the oil used by an average car, they would replace 20% of that 30% share, or about 6% of petroleum demand.
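The arithmetic in this paragraph is simple enough to check directly. This sketch uses only the figures given in the text and, like the text, assumes each EV displaces the oil use of an average car and that cars still account for roughly 30% of oil demand in 2040.

```python
CARS_2040 = 2_000_000_000      # estimated cars on the road in 2040 (from the text)
EVS_2040 = 400_000_000         # optimistic EV forecast for 2040 (from the text)
CAR_SHARE_OF_OIL = 0.30        # share of world oil used by cars (from the text)


def oil_demand_displaced(evs: int, cars: int, car_share_of_oil: float) -> float:
    """Fraction of total oil demand displaced, assuming each EV replaces an average car."""
    ev_fraction_of_fleet = evs / cars
    return ev_fraction_of_fleet * car_share_of_oil


share = oil_demand_displaced(EVS_2040, CARS_2040, CAR_SHARE_OF_OIL)
print(f"EVs displace about {share:.0%} of global oil demand")
```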

In any event, batteries don’t represent a revolution in personal mobility equivalent to, say, going from the horse-and-buggy to the car—an analogy that has been invoked.[69] Driving an EV is more analogous to changing what horses are fed and importing the new fodder.

Moore’s Law Misapplied

Faced with all the realities outlined above regarding green technologies, new energy economy enthusiasts nevertheless believe that true breakthroughs are yet to come and are even inevitable. That’s because, so it is claimed, energy tech will follow the same trajectory as that seen in recent decades with computing and communications. The world will yet see the equivalent of an Amazon or “Apple of clean energy.”[70]

This idea is seductive because of the astounding advances in silicon technologies that so few forecasters anticipated decades ago. It is an idea that renders moot any cautions that wind/solar/batteries are too expensive today. Such caution is seen as foolish and shortsighted, analogous to asserting, circa 1980, that the average citizen would never be able to afford a computer, or to asserting, in 1984 (the year that the world’s first cell phone was released), that a billion people would never own a cell phone, which then cost $9,000 (in today’s dollars) and was a two-pound “brick” with a 30-minute talk time.

Today’s smartphones are not only far cheaper; they are far more powerful than a room-size IBM mainframe from 30 years ago. That transformation arose from engineers inexorably shrinking the size and energy appetite of transistors, and consequently increasing their number per chip roughly twofold every two years—the “Moore’s Law” trend, named for Intel cofounder Gordon Moore.

The compound effect of that kind of progress has indeed caused a revolution. Over the past 60 years, Moore’s Law has seen the efficiency of how logic engines use energy improve by over a billionfold.[71] But a similar transformation in how energy is produced or stored isn’t just unlikely; it can’t happen with the physics we know today.
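The compounding arithmetic is what makes Moore’s Law so powerful: doubling every two years for 60 years is 30 doublings, or about a billionfold, the same order of magnitude as the efficiency gain cited above. (Strictly, Moore’s Law tracks transistor counts, so treat the match as an order-of-magnitude illustration.) For contrast, the sketch also compounds a hypothetical steady 1%-per-year improvement, a rate chosen only for illustration.

```python
def doubling_growth(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def steady_growth(years: float, annual_rate: float) -> float:
    """Growth factor after `years` of compounding at `annual_rate` (e.g. 0.01 for 1% per year)."""
    return (1 + annual_rate) ** years


moore = doubling_growth(60)          # 30 doublings
steady = steady_growth(60, 0.01)     # hypothetical 1%/year improvement, for comparison
print(f"doubling every 2 years for 60 years: {moore:,.0f}x")
print(f"1% per year for 60 years: {steady:.2f}x")
```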

In the world of people, cars, planes, and large-scale industrial systems, increasing speed or carrying capacity causes hardware to expand, not shrink. The energy needed to move a ton of people, heat a ton of steel or silicon, or grow a ton of food is determined by properties of nature whose boundaries are set by laws of gravity, inertia, friction, mass, and thermodynamics.

If combustion engines, for example, could achieve the kind of scaling efficiency that computers have since 1971, the year Intel introduced the first commercial microprocessor, a car engine would generate a thousandfold more horsepower and shrink to the size of an ant.[72] With such an engine, a car could actually fly, very fast.

If photovoltaics scaled by Moore’s Law, a single postage-stamp-size solar array would power the Empire State Building. If batteries scaled by Moore’s Law, a battery the size of a book, costing three cents, could power an A380 to Asia.

But only in the world of comic books does the physics of propulsion or energy production work like that. In our universe, power scales the other way.

An ant-size engine (which has been built) produces roughly 100,000 times less power than a Prius. An ant-size solar PV array (also feasible) produces a thousandfold less energy than an ant’s biological muscles. The energy equivalent of the aviation fuel actually used by an aircraft flying to Asia would take $60 million worth of Tesla-type batteries weighing five times more than that aircraft.[73]

The challenge in storing and processing information using the smallest possible amount of energy is distinct from the challenge of producing energy, or of moving or reshaping physical objects. The two domains entail different laws of physics.

The world of logic is rooted in simply knowing and storing the fact of the binary state of a switch—i.e., whether it is on or off. Logic engines don’t produce physical action but are designed to manipulate the idea of the numbers zero and one. Unlike engines that carry people, logic engines can use software to do things such as compress information through clever mathematics and thus reduce energy use. No comparable compression options exist in the world of humans and hardware.

Of course, wind turbines, solar cells, and batteries will continue to improve significantly in cost and performance; so will drilling rigs and combustion turbines (a subject taken up next). And, of course, Silicon Valley information technology will bring important, even dramatic, efficiency gains in the production and management of energy and physical goods (a prospect also taken up below). But the outcomes won’t be as miraculous as the invention of the integrated circuit, or the discovery of petroleum or nuclear fission.

Sliding Down the Renewable Asymptote

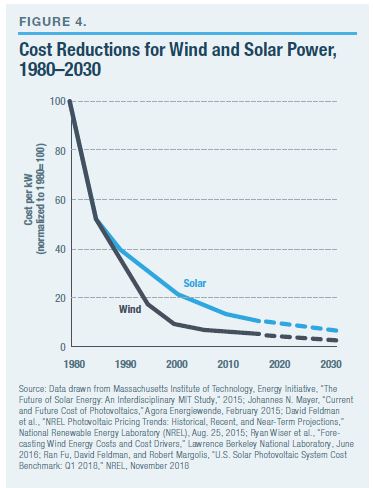

Forecasts for a continual rapid decline in costs for wind/solar/batteries are inspired by the gains that those technologies have already experienced. The first two decades of commercialization, after the 1980s, saw a 10-fold reduction in costs. But the path for improvements now follows what mathematicians call an asymptote; or, put in economic terms, improvements are subject to a law of diminishing returns where every incremental gain yields less progress than in the past (Figure 4).

This is a normal phenomenon in all physical systems. Throughout history, engineers have achieved big gains in the early years of a technology’s development, whether wind or gas turbines, steam or sailing ships, internal combustion or photovoltaic cells. Over time, engineers manage to approach nature’s limits. Bragging rights for gains in efficiency (or speed, or other equivalent metrics such as energy density, i.e., energy per unit of weight or volume) then shrink from double-digit percentages to fractional percentage changes. Whether it’s solar, wind tech, or aircraft turbines, performance gains are now measured in single-digit percentages. Such progress is economically meaningful but is not revolutionary.
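The diminishing-returns pattern can be illustrated with a hypothetical model in which each round of engineering closes a fixed fraction of the remaining gap to a physical limit. The starting efficiency, the 33% limit, and the 30%-per-step closure rate are illustrative assumptions, not data from Figure 4. The point is only that the absolute gain shrinks at every step even though engineers do equally well each time.

```python
def approach_limit(start: float, limit: float, gap_fraction: float, steps: int) -> list[float]:
    """Hypothetical model: each step closes a fixed fraction of the remaining gap to a hard limit."""
    path = [start]
    for _ in range(steps):
        current = path[-1]
        path.append(current + gap_fraction * (limit - current))
    return path


# Illustrative values only: start at 10% efficiency, limit of 33%, close 30% of the gap per step.
path = approach_limit(start=0.10, limit=0.33, gap_fraction=0.3, steps=6)
gains = [after - before for before, after in zip(path, path[1:])]
for step, (value, gain) in enumerate(zip(path[1:], gains), start=1):
    print(f"step {step}: efficiency {value:.3f} (gain {gain:+.3f})")
```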

The physics-constrained limits of energy systems are unequivocal. Solar arrays can’t convert more photons than those that arrive from the sun. Wind turbines can’t extract more energy than exists in the kinetic flows of moving air. Batteries are bound by the physical chemistry of the molecules chosen. Similarly, no matter how much better jet engines become, an A380 will never fly to the moon. An oil-burning engine can’t produce more energy than what is contained in the physical chemistry of hydrocarbons.

Combustion engines have what’s called a Carnot Efficiency Limit, which is anchored in the temperature of combustion and the energy available in the fuel. The limits are long established and well understood. In theory, at a high enough temperature, 80% of the chemical energy that exists in the fuel can be turned into power.[74] Using today’s high-temperature materials, the best hydrocarbon engines convert about 50%–60% to power. There’s still room to improve but nothing like the 10-fold to nearly hundredfold revolutionary advances achieved in the first couple of decades after their invention. Wind/solar technologies are now at the same place on that asymptotic technology curve.
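The Carnot limit is the standard thermodynamic bound η = 1 − T_cold / T_hot, with both temperatures in kelvin. The temperatures in this sketch are illustrative assumptions: a hot side of 1,500 K rejecting heat at about 300 K (roughly room temperature) gives the 80% ceiling the text cites.

```python
def carnot_efficiency(hot_k: float, cold_k: float) -> float:
    """Maximum fraction of heat convertible to work between two reservoirs (temperatures in kelvin)."""
    if not 0 < cold_k < hot_k:
        raise ValueError("require 0 < cold_k < hot_k")
    return 1 - cold_k / hot_k


# Illustrative temperatures, not figures from the text.
print(f"{carnot_efficiency(1500, 300):.0%}")   # 80%
```

Raising the hot-side temperature pushes the bound up, which is why the text ties further engine gains to high-temperature materials.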

For wind, the boundary is called the Betz Limit, which dictates how much of the kinetic energy in air a blade can capture; that limit is about 60%.[75] Capturing all the kinetic energy would mean, by definition, no air movement and thus nothing to capture. There needs to be wind for the turbine to turn. Modern turbines already exceed 45% conversion.[76] That leaves some real gains to be made but, as with combustion engines, nothing revolutionary.[77] Another 10-fold improvement is not possible.
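The Betz figure comes from one-dimensional actuator-disk (momentum) theory, in which a rotor’s power coefficient is C_p = 4a(1 − a)², where a is the fractional slowdown of the wind at the rotor (the axial induction factor). This sketch uses a brute-force search to recover the textbook optimum of a = 1/3, where C_p = 16/27, about 59.3%, which is the “about 60%” in the text. Captured power falls off on either side of that point, consistent with the text’s remark that stopping the air entirely would capture nothing.

```python
def power_coefficient(a: float) -> float:
    """Actuator-disk power coefficient C_p for axial induction factor a."""
    return 4 * a * (1 - a) ** 2


# Brute-force search over a in [0, 0.5] in steps of 0.0001; theory puts the optimum at a = 1/3.
candidates = (i / 10_000 for i in range(5_001))
best_a = max(candidates, key=power_coefficient)
betz_limit = 16 / 27
print(f"best a = {best_a:.4f}, C_p = {power_coefficient(best_a):.4f}")
print(f"Betz limit 16/27 = {betz_limit:.4f}")
```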

For silicon photovoltaic (PV) cells, the physics boundary is called the Shockley-Queisser Limit: a maximum of about 33% of the energy in incoming sunlight can be converted into electricity. State-of-the-art commercial PVs achieve just over 26% conversion efficiency, in other words, near the boundary. While researchers keep unearthing new non-silicon options that offer tantalizing performance improvements, all have similar physics boundaries, and none is remotely close to manufacturability at all, never mind at low costs.[78] There are no 10-fold gains left.[79]
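Putting the quoted limits next to today’s figures shows how much headroom is left in conversion efficiency alone. The values come from the text, except that the combustion figure uses the midpoint of the quoted 50%-60% range and the wind limit uses the exact Betz value of 16/27. Because modern turbines already exceed 45%, the wind factor is an upper bound. Efficiency is not the only driver of cost, so this is a sketch of physical headroom, not a cost forecast.

```python
# (theoretical limit, best achieved today) as fractions, from the figures quoted in the text.
# Assumption: the combustion "today" value is the midpoint of the quoted 50%-60% range.
LIMITS = {
    "combustion engine (Carnot)": (0.80, 0.55),
    "wind turbine (Betz)": (16 / 27, 0.45),
    "silicon PV (Shockley-Queisser)": (0.33, 0.26),
}


def max_improvement_factor(limit: float, current: float) -> float:
    """Largest possible multiple of today's conversion efficiency before reaching the limit."""
    return limit / current


for name, (limit, current) in LIMITS.items():
    print(f"{name}: at most {max_improvement_factor(limit, current):.2f}x today's efficiency")
```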

Future advances in wind turbine and solar economics are now centered on incremental engineering improvements: economies of scale in making turbines enormous, taller than the Washington Monument, and similarly massive, square-mile utility-scale solar arrays. For both technologies, the underlying key components (concrete, steel, and fiberglass for wind; silicon, copper, and glass for solar) are already in mass production and well down asymptotic cost curves in their own domains.

While there are no surprising gains in economies of scale available in the supply chain, that doesn’t mean that costs are immune to improvements. In fact, all manufacturing processes experience continual improvements in production efficiency as volumes rise. This experience curve is called Wright’s Law. (That “law” was first documented in 1936, as it related then to the challenge of manufacturing aircraft at costs that markets could tolerate. Analogously, while aviation took off and created a big, worldwide transportation industry, it didn’t eliminate automobiles, or the need for ships.) Experience leading to lower incremental costs is to be expected; but, again, that’s not the kind of revolutionary improvement that could make a new energy economy even remotely plausible.
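Wright’s Law is usually written as a power law: unit cost falls by a fixed percentage (the learning rate) with every doubling of cumulative production. This sketch uses a hypothetical 20% learning rate and an arbitrary first-unit cost. It shows why experience-curve gains slow in a mature industry: each further 20% cut requires doubling everything ever built so far.

```python
import math


def wright_unit_cost(cumulative_units: float, first_unit_cost: float, learning_rate: float) -> float:
    """Unit cost under Wright's Law: each doubling of cumulative output cuts cost by `learning_rate`."""
    exponent = math.log2(1 - learning_rate)   # negative; e.g. log2(0.8) is about -0.32
    return first_unit_cost * cumulative_units ** exponent


# Hypothetical parameters: 20% learning rate, first-unit cost of 100 (arbitrary units).
for doublings in range(5):
    units = 2 ** doublings
    print(f"{units:>2} units built: unit cost {wright_unit_cost(units, 100.0, 0.20):.1f}")
```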

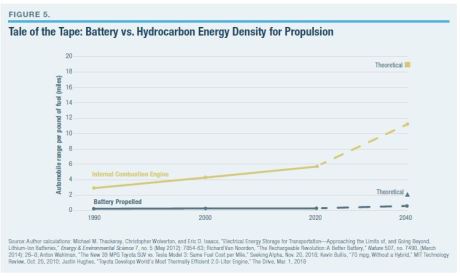

As for modern batteries, there are still promising options for significant improvements in their underlying physical chemistry. New non-lithium materials in research labs offer as much as a 200% and even 300% gain in inherent performance.[80] Such gains nevertheless don’t constitute the kinds of 10-fold or hundredfold advances seen in the early days of combustion chemistry.[81] Prospective improvements will still leave batteries miles away from the real competition: petroleum.

There are no subsidies and no engineering from Silicon Valley or elsewhere that can close the physics-centric gap in energy densities between batteries and oil (Figure 5). The energy stored per pound is the critical metric for vehicles and, especially, aircraft. The maximum potential energy contained in oil molecules is about 1,500% greater, pound for pound, than the maximum in lithium chemistry.[82] That’s why aircraft and rockets are powered by hydrocarbons. And that’s why a 20% improvement in oil propulsion (eminently feasible) is more valuable than a 200% improvement in batteries (still difficult).
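The comparison in this paragraph can be made concrete with relative numbers. Taking the text’s figure that oil holds about 1,500% more energy per pound than lithium chemistry (i.e., 16 times as much), this sketch treats a 20% improvement for oil and a 200% improvement (a tripling) for batteries as proportional gains in useful energy per pound. That is a simplification: it compares stored energy only and ignores differences in how efficiently engines and electric motors convert it to motion.

```python
# Relative energy per pound implied by the text: oil ~1,500% more than lithium, i.e. 16x.
OIL = 16.0
LITHIUM = 1.0

oil_improved = OIL * 1.20          # a 20% improvement for oil
battery_improved = LITHIUM * 3.0   # a 200% improvement (tripling) for batteries

print(f"oil gain: +{oil_improved - OIL:.1f}, battery gain: +{battery_improved - LITHIUM:.1f}")
print(f"oil still ahead by {oil_improved / battery_improved:.1f}x")
```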

Finally, when it comes to limits, it is relevant to note that the technologies that unlocked shale oil and gas are still in the early days of engineering development, unlike the older technologies of wind, solar, and batteries. Tenfold gains are still possible in terms of how much energy can be extracted by a rig from shale rock before approaching physics limits.[83] That fact helps explain why shale oil and gas have added 2,000% more to U.S. energy production over the past decade than have wind and solar combined.[84]

Digitalization Won’t Uberize the Energy Sector

Digital tools are already improving and can further improve all manner of efficiencies across entire swaths of the economy, and it is reasonable to expect that software will yet bring significant improvements in both the underlying efficiency of wind/solar/battery machines and in the efficiency of how such machines are integrated into infrastructures. Silicon logic has improved, for example, the control and thus the fuel efficiency of combustion engines, and it is doing the same for wind turbines. Similarly, software epitomized by Uber has shown that optimizing the efficiency of using expensive transportation assets lowers costs. Uberizing all manner of capital assets is inevitable.

Uberizing the electric grid without hydrocarbons is another matter entirely.

The peak demand problem that software can’t fix

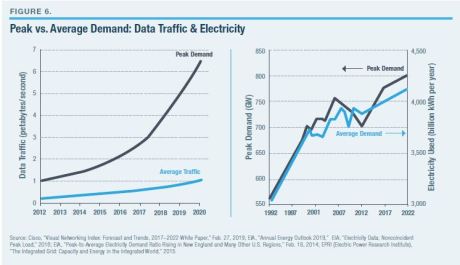

In the energy world, one of the most vexing problems is optimally matching electricity supply and demand (Figure 6). Here the data show that society, and the electricity-consuming services that people like, are widening the gap between peaks and valleys of demand. For a hydrocarbon-free grid, the net effect will be an increased need for batteries to meet those peaks.
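To make the peak problem concrete, this minimal sketch uses an invented 24-hour demand profile (the numbers are hypothetical, not taken from Figure 6). If a hydrocarbon-free grid supplied a flat output equal to the day’s average demand, storage would have to cover every hour above that line, and a wider gap between peak and valley means more stored energy. The sketch ignores charging losses and assumes storage is refilled from the surplus in low-demand hours.

```python
# Hypothetical hourly demand (GW) for one day, invented for illustration only.
DEMAND_GW = [30, 28, 27, 27, 28, 32, 38, 42, 44, 45, 46, 47,
             47, 47, 48, 50, 54, 58, 60, 57, 50, 42, 36, 32]


def storage_needed_gwh(demand_gw: list[float], flat_supply_gw: float) -> float:
    """Energy (GWh) storage must deliver in hours when demand exceeds a flat supply level.

    Each entry is one hour, so summing GW shortfalls gives GWh.
    """
    return sum(max(d - flat_supply_gw, 0.0) for d in demand_gw)


average_gw = sum(DEMAND_GW) / len(DEMAND_GW)
print(f"peak-to-valley ratio: {max(DEMAND_GW) / min(DEMAND_GW):.2f}")
print(f"storage needed with flat supply at the daily average: "
      f"{storage_needed_gwh(DEMAND_GW, average_gw):.1f} GWh")
```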

All this has relevance for encouraging EVs. In terms of managing the inconvenient cyclical nature of demand, shifting transportation fuel use from oil to the grid will make peak management far more challenging. People tend to refuel when it’s convenient; that’s easy to accommodate with oil, given the ease of storage. EV refueling will exacerbate the already-episodic nature of grid demand.

To ameliorate this problem, one proposal is to encourage or even require off-peak EV fueling.[85] The jury is out on just how popular that will be or whether it will even be tolerated.
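A minimal sketch of why charging time matters: the hourly profile, the 12 GWh of daily EV charging, and the four-hour charging window are all hypothetical values chosen for illustration. The same EV energy raises the daily peak if it lands in the evening, but not if it is shifted overnight.

```python
# Hypothetical hourly baseline demand (GW) and EV charging assumptions, for illustration only.
BASE_GW = [30, 28, 27, 27, 28, 32, 38, 42, 44, 45, 46, 47,
           47, 47, 48, 50, 54, 58, 60, 57, 50, 42, 36, 32]
EV_ENERGY_GWH = 12.0   # hypothetical daily EV charging energy
CHARGE_HOURS = 4       # hypothetical length of the charging window, in hours


def peak_with_ev(base: list[float], start_hour: int) -> float:
    """Daily peak (GW) when EV energy is spread evenly over CHARGE_HOURS starting at start_hour."""
    load = [float(x) for x in base]
    for hour in range(start_hour, start_hour + CHARGE_HOURS):
        load[hour % 24] += EV_ENERGY_GWH / CHARGE_HOURS   # wraps past midnight if needed
    return max(load)


print(f"no EV charging:            {max(BASE_GW):.0f} GW")
print(f"evening, 17:00 to 21:00:   {peak_with_ev(BASE_GW, 17):.0f} GW")
print(f"overnight, 01:00 to 05:00: {peak_with_ev(BASE_GW, 1):.0f} GW")
```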

Although kilowatt-hours and cars—key targets in the new energy economy prescriptions—constitute only 60% of the energy economy, global demand for both is centuries away from saturation. Green enthusiasts make extravagant claims about the effect of Uber-like options and self-driving cars. However, the data show that the economic efficiencies from Uberizing have so far increased the use of cars and peak urban congestion.[86] Similarly, many analysts now see autonomous vehicles amplifying, not dampening, that effect.[87]

That’s because people, and thus markets, are focused on economic efficiency and not on energy efficiency. The former can be associated with reducing energy use; but it is also, and more often, associated with increased energy demand. Cars use more energy per mile than horses do, but they offer enormous gains in economic efficiency. Computers, similarly, use far more energy than pencil and paper.

Uberizing improves energy efficiencies but increases demand

Every energy conversion in our universe entails built-in inefficiencies—converting heat to propulsion, carbohydrates to motion, photons to electrons, electrons to data, and so forth. All entail a certain energy cost, or waste, that can be reduced but never eliminated. But, in no small irony, history shows—as economists have often noted—that improvements in efficiency lead to increased, not decreased, energy consumption.

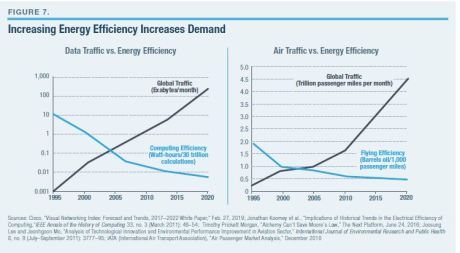

If, at the dawn of the modern era, steam engines had remained as inefficient as the first ones invented, they would never have become affordable enough to proliferate, and neither the attendant economic gains nor the associated rise in coal demand would have happened. We see the same thing with modern combustion engines. Today’s aircraft, for example, are three times as energy-efficient as the first commercial passenger jets in the 1950s.[88] That didn’t reduce fuel use; instead, it propelled air traffic to soar and, with it, brought a fourfold rise in jet fuel burned.[89]
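The two aviation figures in this paragraph imply a third. If fuel burned equals traffic divided by fuel efficiency, a threefold efficiency gain combined with a fourfold rise in fuel burned implies roughly twelvefold growth in traffic. The sketch assumes efficiency is measured per passenger-mile and that both figures cover the same period; the text does not state either explicitly.

```python
def implied_activity_growth(fuel_growth: float, efficiency_gain: float) -> float:
    """Activity growth implied if fuel used = activity / efficiency."""
    return fuel_growth * efficiency_gain


traffic_growth = implied_activity_growth(fuel_growth=4.0, efficiency_gain=3.0)
fuel_if_traffic_flat = 1 / 3.0   # fuel use had traffic stayed at 1950s levels
print(f"implied traffic growth: {traffic_growth:.0f}x")
print(f"fuel use with flat traffic: {fuel_if_traffic_flat:.2f}x of 1950s levels")
```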

Similarly, it was the astounding gains in computing’s energy efficiency that drove the meteoric rise in data traffic on the Internet—which resulted in far more energy used by computing. Global computing and communications, all told, now consumes the energy equivalent of 3 billion barrels of oil per year, more energy than global aviation.[90]

The purpose of improving efficiency in the real world, as opposed to the policy world, is to reduce the cost of enjoying the benefits from an energy-consuming engine or machine. So long as people and businesses want more of the benefits, declining cost leads to increased demand that, on average, outstrips any “savings” from the efficiency gains. Figure 7 shows how this efficiency effect has played out for computing and air travel.[91]
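Economists often illustrate this rebound effect with a constant-elasticity demand model. In this minimal sketch, an efficiency gain cuts the energy cost of each unit of service, demand for the service responds according to a hypothetical price elasticity, and energy use equals service demand divided by efficiency. It assumes the service’s cost is entirely energy cost, which overstates the effect for most real goods. When demand is elastic (elasticity above 1), energy use rises despite the efficiency gain, the outcome the text describes.

```python
def energy_use_multiplier(efficiency_gain: float, price_elasticity: float) -> float:
    """Change in energy use after an efficiency gain, under a constant-elasticity demand model.

    Assumes cost per unit of service is all energy cost, so it falls by `efficiency_gain`;
    service demand then scales by efficiency_gain ** price_elasticity.
    """
    service_multiplier = efficiency_gain ** price_elasticity
    return service_multiplier / efficiency_gain   # energy per unit of service falls by efficiency_gain


# Hypothetical elasticities; a doubling of efficiency in each case.
for elasticity in (0.5, 1.0, 1.5):
    print(f"elasticity {elasticity}: energy use x{energy_use_multiplier(2.0, elasticity):.2f}")
```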

Of course, demand growth for a specific product or service can subside in a (wealthy) society when limits are hit: the amount of food a person can eat, the miles per day an individual is willing to drive, the number of refrigerators or lightbulbs per household, etc. But a world of 8 billion people is a long way from reaching any such limits.

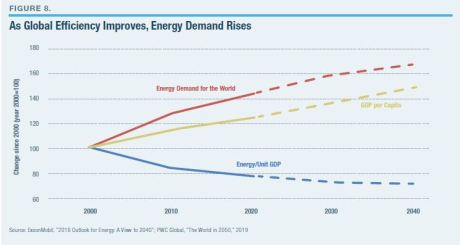

The macro picture of the relationship between efficiency and world energy demand is clear (Figure 8). Technology has continually improved society’s energy efficiency. But far from ending global energy growth, efficiency has enabled it. The improvements in cost and efficiency brought about through digital technologies will accelerate, not end, that trend.

Energy Revolutions Are Still Beyond the Horizon

When the world’s poorest 4 billion people increase their energy use to just 15% of the per-capita level of developed economies, global energy consumption will rise by the equivalent of adding an entire United States’ worth of demand.[92] In the face of such projections, there are proposals that governments should constrain demand, and even ban certain energy-consuming behaviors. One academic article proposed that the “sale of energy-hungry versions of a device or an application could be forbidden on the market, and the limitations could become gradually stricter from year to year, to stimulate energy-saving product lines.”[93] Others have offered proposals to “reduce dependency on energy” by restricting the sizes of infrastructures or requiring the use of mass transit or car pools.[94]

The issue here is not only that poorer people will inevitably want to—and will be able to—live more like wealthier people but that new inventions continually create new demands for energy. The invention of the aircraft means that every $1 billion in new jets produced leads to some $5 billion in aviation fuel consumed over two decades to operate them. Similarly, every $1 billion in data centers built will consume $7 billion in electricity over the same period.[95] The world is buying both at the rate of about $100 billion a year.[96]
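The paragraph’s ratios can be turned into the operating spending that each year’s purchases lock in. This sketch assumes that “about $100 billion a year” applies to each category separately and spreads the lifetime spending evenly over the two decades, which is a simplification.

```python
# Ratios from the text: two-decade operating spend per $1 of hardware.
FUEL_PER_DOLLAR_OF_JETS = 5.0
ELECTRICITY_PER_DOLLAR_OF_DATA_CENTERS = 7.0
ANNUAL_PURCHASES_BILLION = 100.0   # assumption: ~$100B/year for EACH category
LIFETIME_YEARS = 20


def committed_spend(annual_purchases: float, ratio: float) -> tuple[float, float]:
    """Total and average-per-year operating spend locked in by one year's purchases."""
    total = annual_purchases * ratio
    return total, total / LIFETIME_YEARS


for label, ratio in (("jet fuel", FUEL_PER_DOLLAR_OF_JETS),
                     ("electricity", ELECTRICITY_PER_DOLLAR_OF_DATA_CENTERS)):
    total, per_year = committed_spend(ANNUAL_PURCHASES_BILLION, ratio)
    print(f"{label}: ${total:.0f}B over {LIFETIME_YEARS} years (~${per_year:.0f}B/year)")
```

Each new year of purchases adds another such commitment on top of the previous ones.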

The inexorable march of technology progress for things that use energy creates the seductive idea that something radically new is also inevitable in ways to produce energy. But sometimes, the old or established technology is the optimal solution and nearly immune to disruption. We still use stone, bricks, and concrete, all of which date to antiquity. We do so because they’re optimal, not “old.” So are the wheel, water pipes, electric wires … the list is long. Hydrocarbons are, so far, optimal ways to power most of what society needs and wants.

More than a decade ago, Google focused its vaunted engineering talent on a project called “RE<C,” seeking to develop renewable energy cheaper than coal. After the project was canceled in 2014, Google’s lead engineers wrote: “Incremental improvements to existing [energy] technologies aren’t enough; we need something truly disruptive… We don’t have the answers.”[97] Those engineers rediscovered the kinds of physics and scale realities highlighted in this paper.

An energy revolution will come only from the pursuit of basic sciences. Or, as Bill Gates has phrased it, the challenge calls for scientific “miracles.”[98] These will emerge from basic research, not from subsidies for yesterday’s technologies. The Internet didn’t emerge from subsidizing the dial-up phone, or the transistor from subsidizing vacuum tubes, or the automobile from subsidizing railroads.

However, 95% of private-sector R&D spending and the majority of government R&D is directed at “development” and not basic research.[99] If policymakers want a revolution in energy tech, the single most important action would be to radically refocus and expand support for basic scientific research.

Hydrocarbons (oil, natural gas, and coal) are the world’s principal energy resource today and will continue to be so in the foreseeable future. Wind turbines, solar arrays, and batteries, meanwhile, constitute a small source of energy, and physics dictates that they will remain so. There is simply no possibility that the world is undergoing, or can undergo, a near-term transition to a “new energy economy.”



Comment by Willem Post on April 22, 2019 at 8:19am

Arthus,

Thank you for posting this.

Finally a summary of all the issues involved regarding going all-out for wind and solar.

All these issues I have covered in my articles over the years.

This is the best and most realistic summary BY FAR
